Anyway, instead of

writing something reactionary and vitriolic like every other journalist,

I decided to sleep on it. Now, after a night of vivid fever dreams (and

more scenes involving a topless Zuckerberg than I initially

anticipated), I can tell you that I’ve seen the future of Facebook,

Oculus Rift, and virtual reality — and it’s pretty damn awesome.

One wonders what Carmack’s long-term plans are, now that Oculus VR has been acquired by Facebook.

Be patient

First,

it’s important to remember that, in the short term, the Oculus Rift is

unlikely to be negatively affected by this acquisition. According to Oculus VR co-founder Palmer Luckey,

thanks to Facebook’s additional resources, the Oculus Rift will come to

market “with fewer compromises even faster than we anticipated.” Luckey

also says there won’t be any weird Facebook tie-ins; if you want to use

the Rift as a gaming headset, that option will still be available.

Longer-term, of course, the picture is a little murkier. Zuckerberg’s post explaining the acquisition

makes it clear that he’s more interested in the non-gaming applications

of virtual reality. “After games, we’re going to make Oculus a platform

for many other experiences… This is really a new communication

platform… Imagine sharing not just moments with your friends online, but

entire experiences and adventures.”

Facebook for your face: An Oatmeal comic that successfully predicted Facebook’s acquisition some months ago.


Second Second Life

Ultimately,

I think Facebook’s acquisition of Oculus VR is a very speculative bet

on the future. Facebook knows that it rules the web right now, but

things can change very, very quickly. Facebook showed great savviness

when it caught the very rapid consumer shift to smartphones — and now

it’s trying to work out what the Next Big Thing will be. Instagram,

WhatsApp, Oculus VR — these acquisitions all make sense, in that they

could be disruptive to Facebook’s position as the world’s most important

communications platform.

While you might just see the Oculus Rift

as an interesting gaming peripheral, it might not always be so. In

general, new technologies are adopted by the military, gaming, and sex

industries first — and then eventually, as the tech becomes cheaper and

more polished, they percolate down to the mass market. Right now, it’s

hard to imagine your mom wearing an Oculus Rift — but in five or 10

years, if virtual reality finally comes to fruition, then such a

scenario becomes a whole lot more likely.

Who wouldn’t want to walk around Second Life with a VR headset?

For

me, it’s easy to imagine a future Facebook where, instead of sitting in

front of your PC dumbly clicking through pages and photos with your

mouse, you sit back on the sofa, don your Oculus Rift, and walk around

your friends’ virtual reality homes. As you walk around the virtual

space, your Liked movies would be under the TV, your Liked music would

be on the hi-fi (which is linked to Spotify), and your Shared/Liked

links would be spread out on the virtual coffee table. To look through

someone’s photos, you might pick up a virtual photo album. I’m sure

third parties, such as Zynga and King, would have a ball developing

virtual reality versions of

FarmVille and

Candy Crush Saga.

Visiting fan pages would be pretty awesome, too — perhaps Coca-Cola’s

Facebook page would be full of VR polar bears and happy Santa

Clauses, and you’d be able to hang out with the VR versions of your

favorite artists and celebrities too, of course.

And then, of

course, there are all the other benefits of advanced virtual reality —

use cases that have been bandied around since the first VR setups back

in the ’80s. Remote learning, virtual reality Skype calls, face-to-face

doctor consultations from the comfort of your home — really, the

possible applications for an advanced virtual reality system are endless

and very exciting.

But of course, with Facebook’s involvement,

those applications won’t only be endless and exciting — they’ll make you

fear for the future of society as well. As I’ve written about

extensively in the past, both Facebook and Google are very much in the

business of accumulating vast amounts of data, and then monetizing it. Just last week, I wrote about Facebook’s facial recognition algorithm

reaching human levels of accuracy. For now, Facebook and Google are

mostly limited to tracking your behavior on the web — but with the

advent of wearable computing, such as Glass and Oculus Rift, your

real-world behavior can also be tracked.

And so we finally reach

the crux of the Facebook/Oculus story: The dichotomy of awesome,

increasingly powerful wearable tech. On the one hand, it grants us

amazingly useful functionality and ubiquitous connectivity that really

does change lives. On the other hand, it warmly invites corporate

entities into our private lives. I am very, very excited about the

future of VR, now that Facebook has signed on — but at the same time,

I’m incredibly nervous about how closely linked we are becoming to our

corporate overlords.

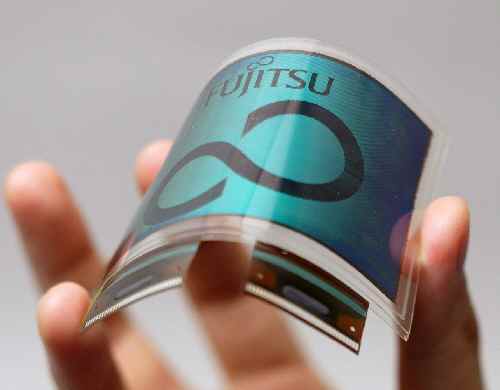

An e-ink screen showing the "ghost" of a prior image

An e-ink screen showing the "ghost" of a prior image

About

About Tags

Tags Popular

Popular Google+

Google+